Why Quality Instability Persists in Fine Chemical and Pharma Manufacturing

Inconsistent quality continues to plague fine chemical and pharmaceutical manufacturing for a few basic reasons. First, raw materials vary from supplier to supplier and from batch to batch. Even small differences in composition can shift reaction behavior and change the impurity profile of the product. Second, manufacturing processes are complicated, often running to dozens of steps, and small deviations creep in along the way, such as a synthesis temperature that is slightly off or humidity that changes in a crystallization chamber. Traditional quality checks done after production usually catch these deviations too late. Most companies still operate reactively, waiting until batches are finished before checking for problems, and by then the small issues have compounded into major ones. When plant managers finally get lab results days later, the manual corrections they make often come too late. This approach leads to expensive recalls, averaging around $740,000 each according to Ponemon Institute data from last year. These challenges are even more critical in industries where regulatory compliance depends on tight precision. To fix this, manufacturers need smarter chemical engineering approaches that replace stop-start quality control with continuous, real-time monitoring.

How an Intelligent Chemical Engineering Solution Enables Real-Time Quality Stabilization

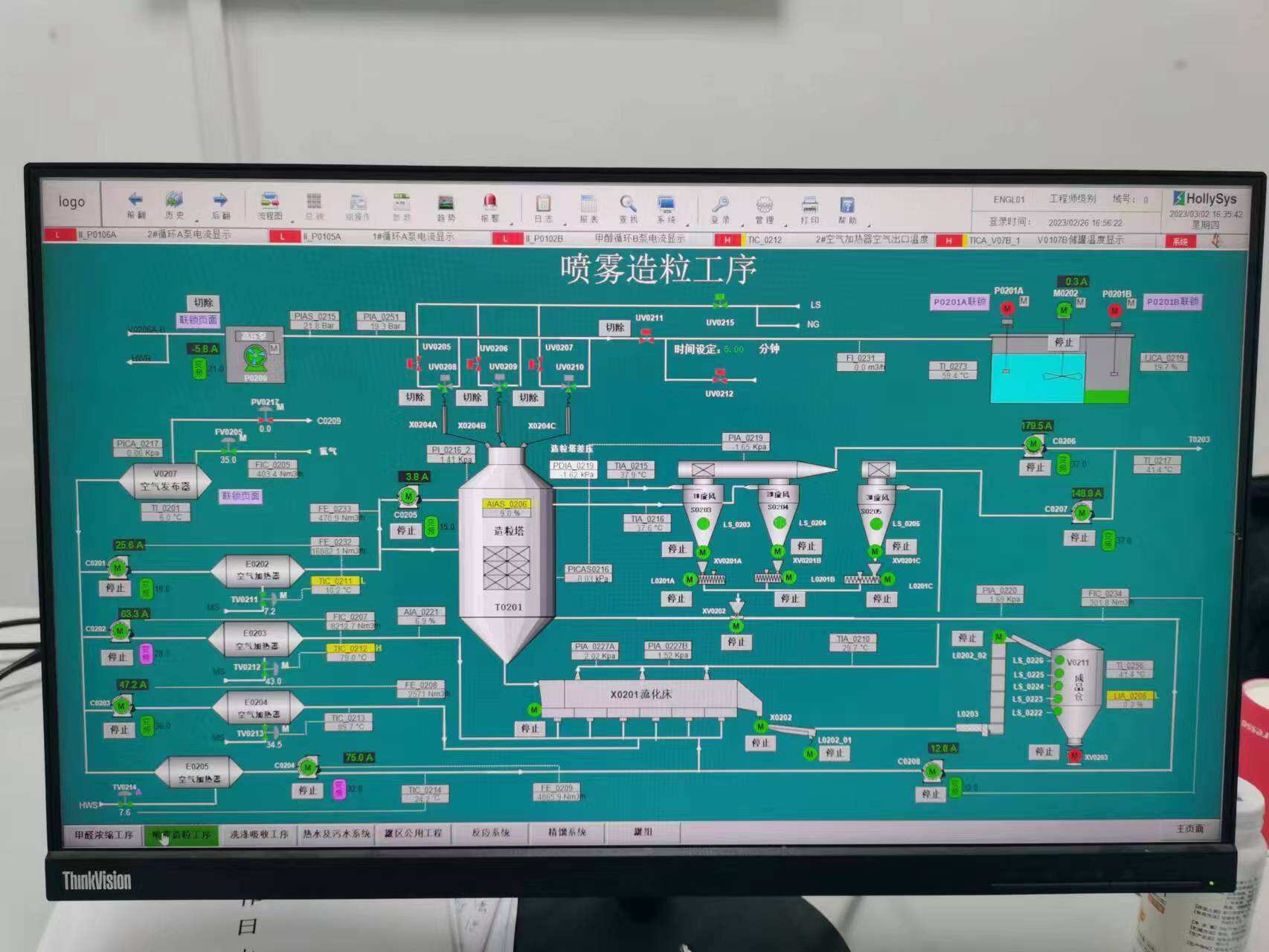

Closed-Loop Integration of AI, IIoT, and Digital Twins

Closed-loop systems bring together AI, IIoT sensors, and digital twin technology to stabilize manufacturing quality in real time. The IIoT sensors monitor variables such as reactor temperature, pressure, and chemical composition, sending thousands of data points every minute to cloud servers or local processing units. The digital twins then run physics-based simulations to spot deviations in product purity or yield before they drift outside acceptable limits. When the AI detects a problem, for example a catalyst degrading over time, it can adjust feed rates or cooling settings within half a second. This fast response keeps reaction conditions stable and prevents batch failures without waiting for someone to notice and correct the problem manually. For pharma companies, the integration makes a real difference: they cut offline quality checks by about three quarters and avoid roughly one in five corrective maintenance interventions.
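
The sense, predict, adjust loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a production controller: the `twin_predict_purity` function, its linear purity model, the 70 °C setpoint, the 99.0% purity limit, and the proportional gain are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    temperature_c: float   # reactor temperature in degrees Celsius
    feed_rate_lph: float   # reagent feed rate in litres per hour


def twin_predict_purity(reading: SensorReading) -> float:
    """Toy stand-in for a digital twin (hypothetical linear model):
    predicted purity falls as temperature rises above a 70 C setpoint."""
    excess = max(0.0, reading.temperature_c - 70.0)
    return 99.8 - 0.2 * excess


def control_step(reading: SensorReading,
                 purity_limit: float = 99.0,
                 gain: float = 0.5) -> float:
    """Return the new feed rate for one pass of the loop.

    If the twin predicts purity below the limit, the feed rate is cut
    in proportion to the predicted shortfall, capped at a 50% cut.
    """
    predicted = twin_predict_purity(reading)
    shortfall = max(0.0, purity_limit - predicted)
    reduction = min(gain * shortfall, 0.5)
    return reading.feed_rate_lph * (1.0 - reduction)


if __name__ == "__main__":
    for temp in (70.0, 76.0, 90.0):
        new_rate = control_step(SensorReading(temp, 100.0))
        print(f"{temp:.0f} C -> feed rate {new_rate:.1f} L/h")
```

In a real plant the prediction step would call a validated process model and the adjustment would go through the control system's own safety interlocks; the sketch only shows how the three stages hand off to each other.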

Adaptive ML Control in API Synthesis: A 73% Reduction in Impurity Drift

ML controllers for pharmaceutical manufacturing optimize API synthesis by continuously adjusting process parameters. During crystallization, these systems compare solvent ratios and crystal formation behavior against historical impurity data, and they adjust the antisolvent injection rate when there is a risk of unwanted crystal forms appearing. One recent example shows how effective this can be: a plant saw residual tetrahydrofuran levels drop by nearly three quarters within just three batches of implementing adaptive machine learning. This works because the algorithms change the residence time in the crystallizer based on real-time particle size measurements. Such tight control means finished products reliably pass demanding pharmacopeia tests such as USP <467> without expensive rework. Manufacturers of antihypertensive drugs have reported cutting rejected batches by anywhere from half to nearly all, and they are able to run their facilities closer to maximum capacity year after year.
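
To make the residence-time adjustment concrete, here is a deliberately simplified sketch. It is a much simpler stand-in for the ML controllers described above: it assumes, purely for illustration, that particle size grows in proportion to residence time, and it learns that growth rate online from each batch. The class name, the learning rate, and the time limits are hypothetical.

```python
class AdaptiveResidenceController:
    """Learns how particle size responds to residence time and
    recommends the residence time expected to hit a target size.

    Assumption for this sketch: size (um) = gain * residence time (min).
    """

    def __init__(self, target_um: float, initial_gain: float = 1.0,
                 learning_rate: float = 0.5):
        self.target_um = target_um
        self.gain = initial_gain            # estimated um of growth per minute
        self.learning_rate = learning_rate  # weight given to the newest batch

    def update(self, residence_min: float, measured_um: float) -> None:
        """Blend the growth rate observed in the last batch into the estimate."""
        if residence_min <= 0:
            raise ValueError("residence time must be positive")
        observed_gain = measured_um / residence_min
        self.gain += self.learning_rate * (observed_gain - self.gain)

    def recommend(self, min_minutes: float = 10.0,
                  max_minutes: float = 240.0) -> float:
        """Residence time for the next batch, clamped to safe limits."""
        if self.gain <= 0:
            return max_minutes
        raw = self.target_um / self.gain
        return max(min_minutes, min(max_minutes, raw))


if __name__ == "__main__":
    controller = AdaptiveResidenceController(target_um=120.0)
    controller.update(residence_min=60.0, measured_um=90.0)
    print(controller.recommend())
```

Clamping the recommendation to fixed limits reflects a common design choice: the learned model proposes a setting, but validated operating ranges have the final say.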

Predictive Analytics: From Reactive QC to Proactive Specification Compliance

In chemical manufacturing, traditional quality control often works reactively: companies test finished batches against specifications only after production is complete. The problem? There is usually a delay between production and test results. During that gap, plants face expensive consequences such as rework, waste, and sometimes regulatory non-compliance. A smarter approach integrates predictive analytics directly into the production process. These systems forecast critical quality attributes, such as yield, purity, and selectivity, while production is still under way rather than waiting until the end.

Hybrid Physics-Informed ML Models for Yield, Purity, and Selectivity Forecasting

When companies combine first-principles chemistry, such as reaction kinetics and thermodynamics, with machine learning models, they create virtual replicas that can predict how a manufacturing process responds when conditions change unexpectedly. In practice, plants bring together mass balance equations, live sensor readings for temperature, pressure, and pH, and historical impurity records. Combining this information lets them spot purity deviations or catalyst deactivation much faster than before, usually within about 15 to 20 minutes. That gives operators enough warning to fix issues before product falls outside quality standards. Plants that have adopted these methods report that bad batches dropped by roughly 40 percent and that almost no product is rejected for failing specifications, according to recent industry statistics. Unlike conventional AI systems, these hybrid models leave a clear record of why decisions were made. This matters a great deal for approval from regulators such as the FDA and EMA, who need to see exactly how conclusions were reached.
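
One common way to build such a hybrid is to let a physics model make the base prediction and train a small data-driven term on whatever the physics misses. The sketch below assumes first-order reaction kinetics for the physics part and a one-parameter least-squares correction driven by temperature deviation. Both choices, along with all names and numbers, are illustrative assumptions rather than a description of any specific plant model.

```python
import math


def physics_conversion(k_per_min: float, t_min: float) -> float:
    """First-order kinetics: fraction of reactant converted after t minutes."""
    return 1.0 - math.exp(-k_per_min * t_min)


def fit_residual_slope(features, residuals) -> float:
    """Least squares through the origin: residual ~ slope * feature."""
    num = sum(f * r for f, r in zip(features, residuals))
    den = sum(f * f for f in features)
    return num / den if den else 0.0


class HybridModel:
    """Physics prediction plus a learned correction for temperature deviation."""

    def __init__(self, k_per_min: float):
        self.k = k_per_min
        self.slope = 0.0  # learned correction per degree C of deviation

    def fit(self, history) -> None:
        """history: iterable of (t_min, temp_deviation_c, measured_conversion)."""
        feats, resids = [], []
        for t_min, temp_dev, measured in history:
            feats.append(temp_dev)
            resids.append(measured - physics_conversion(self.k, t_min))
        self.slope = fit_residual_slope(feats, resids)

    def predict(self, t_min: float, temp_deviation_c: float):
        """Return (prediction, physics_part, learned_correction)."""
        physics = physics_conversion(self.k, t_min)
        correction = self.slope * temp_deviation_c
        total = min(1.0, max(0.0, physics + correction))
        return total, physics, correction


if __name__ == "__main__":
    model = HybridModel(k_per_min=0.05)
    # Synthetic history for demonstration only
    model.fit([(10, 1.0, 0.40), (20, -2.0, 0.61), (30, 3.0, 0.81)])
    print(model.predict(t_min=30, temp_deviation_c=2.0))
```

Returning the physics part and the learned correction separately is what supports the traceability point above: a reviewer can see how much of each forecast came from known chemistry and how much from the fitted term.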

Overcoming Adoption Barriers: Scalable Digital Twins and Edge-Deployed Process Control

Digital twins have huge potential, but getting them adopted in chemical and pharma manufacturing isn't easy. One big obstacle is integration with the legacy equipment that many plants still rely on. According to a 2025 Gartner report, around 60-65% of manufacturers are still working out how to make their existing systems compatible with new twin technologies. Reliance on cloud computing also introduces latency that is unacceptable for real-time reactor control, and high-fidelity simulation models demand more processing power than most plants have available. That's where edge computing comes in. By processing data at the source instead of sending everything to the cloud, response times drop to fractions of a second, and bandwidth demands fall as well. The approach is appealing because companies don't need to rip out their current systems. They can start small and expand gradually, which puts better process optimization within reach of smaller manufacturers too.

Lightweight Twin Modules for Legacy Systems and Real-Time Reactor Optimization

Lightweight digital twin modules work around legacy integration problems with a compact design that fits into existing PLC and DCS setups. They run analytics on the edge device itself, continuously adjusting key parameters such as temperature gradients and reagent feed rates during API production. Because data is processed where it is collected, these systems respond to impurity deviations in just 300 milliseconds, about 73 percent faster than cloud-based alternatives, according to Process Optimization Journal (2025). What sets them apart is their ability to learn and adapt to conditions inside the reactor, so product quality stays within specification even when raw materials vary. Plants using this technology can avoid expensive new hardware investments, and tests show 99.2 percent uptime under load, demonstrating that older equipment can meet today's standards for consistent product quality.
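
Part of what keeps an edge module lightweight is that its per-sample work can be constant in time and memory. As one hedged illustration, the sketch below uses an exponentially weighted moving average (EWMA) to flag impurity drift. The technique, the class name, and the threshold values are assumptions made for the example, and Python is used only for readability; a real module would run in whatever runtime the edge hardware supports.

```python
class EwmaDriftMonitor:
    """Flags sustained drift in an impurity signal using an EWMA.

    Stores a single running value, so each update is O(1) in time and
    memory, which suits resource-constrained edge devices.
    """

    def __init__(self, baseline: float, alpha: float = 0.3,
                 threshold: float = 0.05):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.baseline = baseline    # expected impurity level
        self.alpha = alpha          # weight given to the newest sample
        self.threshold = threshold  # allowed deviation before alerting
        self.ewma = baseline

    def observe(self, value: float) -> bool:
        """Update the average with one sample; return True if drift is flagged."""
        self.ewma = self.alpha * value + (1.0 - self.alpha) * self.ewma
        return abs(self.ewma - self.baseline) > self.threshold


if __name__ == "__main__":
    monitor = EwmaDriftMonitor(baseline=0.10, alpha=0.5, threshold=0.05)
    for sample in (0.10, 0.16, 0.30, 0.32):
        print(sample, monitor.observe(sample))
```

The smoothing means a single noisy reading does not trigger an alert, while a sustained shift does; the `alpha` value trades responsiveness against false alarms.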

FAQ

1. Why do inconsistencies persist in pharmaceutical manufacturing?

Inconsistencies arise due to several factors including variations in raw materials, complex processes, and reliance on traditional quality checks that only occur post-production.

2. How can AI and IIoT improve manufacturing quality?

AI and IIoT facilitate real-time monitoring, allowing instant adjustments to manufacturing processes, thus reducing errors and improving product quality immediately.

3. What role does machine learning play in API synthesis?

Machine learning algorithms optimize API synthesis by continuously adjusting process parameters, thereby reducing impurity drift and enhancing product reliability.

4. How do digital twins contribute to process optimization?

Digital twins simulate real manufacturing processes and support predictive analytics that forecast potential quality issues, so operators can act preemptively and reduce bad batches.

5. Are these modern approaches scalable for older manufacturing systems?

Yes, lightweight twin modules and edge computing can integrate with legacy systems, offering scalable solutions without requiring extensive hardware upgrades.
